VIP address (or not) for Dynatrace Managed

04 May 2022 08:05 PM

Dynatrace's documentation on Managed DNS configuration points to a setup where the DNS name resolves to all the node IPs.

There is no indication of whether it would be OK to have a VIP in front of the cluster nodes. None of the opinions I have heard or read so far has brought me closer to a clear answer. Besides, from past posts in the Community, I believe the following should be referenced:

- https://community.dynatrace.com/t5/Dynatrace-Open-Q-A/Virtual-IP-address-to-Dynatrace-Cluster-Nodes/...

- https://community.dynatrace.com/t5/Dynatrace-Open-Q-A/For-Dynatrace-Managed-on-prem-Do-I-need-a-virt...

- https://community.dynatrace.com/t5/Dynatrace-Open-Q-A/Load-balancer-of-Web-UI/m-p/114238

@Radoslaw_Szulgo makes an important contribution, referencing the "/rest/health" endpoint - https://community.dynatrace.com/t5/Dynatrace-Open-Q-A/Load-balancing-with-single-ActiveGate-in-Manag...

Not immediately related, but with some good insight. - https://community.dynatrace.com/t5/Dynatrace-Open-Q-A/Managed-cluster-nodes-with-custom-domain-and-c...

More recent summary, with @Julius_Loman arguing for the load balancer approach

Now, some additional thoughts come to mind:

- OneAgents get a list of the node IPs and the cluster name, besides AGs. Each OneAgent will try to communicate with all of them. Most load-balancer implementations prohibit direct communication with the nodes being balanced, so all the traffic will go through the load balancer. This raises availability and performance concerns...

- What would be the best way for a Load Balancer to discover which Dynatrace nodes are down? The answer by @Radoslaw_Szulgo above is the only one I found. The only place it seems to be referenced in the documentation is for Premium HA though: https://www.dynatrace.com/support/help/setup-and-configuration/dynatrace-managed/fault-domain-awaren...

- If the node IPs are not routable, or cannot be directly accessed, it seems it wouldn't be possible to designate a unique node to be used for Web UI traffic: https://www.dynatrace.com/support/help/setup-and-configuration/dynatrace-managed/configuration/clust... Or would it be, if it then redirects between the nodes?

- I know that at the moment everything that communicates with Cluster nodes uses TCP port 443, but are there exceptions? It seems from https://www.dynatrace.com/support/help/setup-and-configuration/dynatrace-managed/installation/which-... that there might be some in implementations that have been running for some years.

- While load balancers seem in theory to be a good way to balance traffic, it seems that Dynatrace relies on other ways to do this balancing. Might using a load balancer in some way "unbalance" Dynatrace nodes?

There are several reasons why a VIP might also be needed. In the case I'm dealing with at the moment, one of them is that, for redundancy reasons, nodes are on different networks, and some are not even routable from all OneAgents. This creates a heavier load on one node, because it's on the most "known"/routable network. The recent health dashboards have also given precious insight into this, and we have checked this on the OneAgent client side with oneagentctl.

Now, I would love to hear insight into this VIP/load balancer configuration, and whether there are other best practices for balancing traffic.
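To make the routing problem above concrete, here is a minimal sketch of a TCP probe that could be run from a OneAgent host to see which cluster nodes it can actually reach on port 443. The function names are my own, and any node addresses passed in are placeholders; it only tests that a TCP connection opens and says nothing about what OneAgent itself would choose.

```python
import socket


def can_reach(host: str, port: int = 443, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port opens within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, unreachable networks and timeouts.
        return False


def reachable_nodes(nodes, port: int = 443, timeout: float = 3.0) -> dict:
    """Map each node address to whether it accepted a TCP connection."""
    return {node: can_reach(node, port, timeout) for node in nodes}
```

Calling `reachable_nodes([...])` with the cluster node IPs from each network segment gives a quick picture of which segments can only see one node.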

Labels: managed cluster

05 May 2022 10:09 AM

Hi,

Have you explored network zones to limit your traffic? Refer to the blog below.

05 May 2022 10:16 AM

Good point, as I only briefly mentioned ActiveGates. I have used network zones in several cases, but in the particular one I'm referencing, we don't even use ActiveGates: there is no advantage in using them, since all servers are basically in the same datacenter. Even with AGs/network zones, the same VIP issues would apply though.

06 May 2022 11:33 AM

We used a virtual load balancer in front of the Dynatrace cluster nodes to access the tenant UI in one of our clients' Managed environments. This load balancer had its own rules set up to point to a single node whenever a specific FQDN was requested. I hope this helps you build a similar setup, with custom load balancing based on the request URL context.

Example

dynatrace.abc.com - always going to node 1
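The rule described above can be sketched roughly as follows. This is only an illustration of the idea in Python, not the configuration of any particular load balancer; the class name, hostnames and node names are hypothetical, and IPv6 literals in the Host header are not handled.

```python
from itertools import cycle


class HostRouter:
    """Pin selected hostnames to one node; round-robin everything else."""

    def __init__(self, pinned: dict, pool: list):
        # pinned: hostname -> node that should always serve it (hypothetical names)
        self._pinned = {host.lower(): node for host, node in pinned.items()}
        self._pool = cycle(pool)

    def pick(self, host_header: str) -> str:
        # Drop an optional ":port" suffix and compare case-insensitively.
        host = host_header.split(":")[0].lower()
        if host in self._pinned:
            return self._pinned[host]
        return next(self._pool)
```

For example, `HostRouter({"dt-ui.example.com": "node1"}, ["node1", "node2", "node3"])` would always send requests for `dt-ui.example.com` to `node1` and rotate all other hostnames across the pool.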

06 May 2022 12:34 PM

With the setup you mention, what would you say were SWOT points of it? Did the UI get faster? Any other issues that you noticed?

06 May 2022 12:50 PM

Yes, the tenant UI load time was better than with the default setup. The client had this requirement because a service team was constantly monitoring data and used the tenant frequently to review the results of multiple load tests. We started this in a lower environment, and the results were impressive in terms of data load time in the UI.

06 May 2022 07:22 AM

Hello @AntonioSousa ,

I think we have to separate the UI / API communication from the OA traffic. For OA traffic, a VIP will only make things more complicated. Sure, there are situations where not all nodes are reachable and the load ends up unbalanced, but this should be solved by using AGs, opening those network routes, or simply redesigning your Managed deployment.

For the UI / API part, it depends. Most enterprise customers I work with want and need a hostname in their own domain, and they use F5 or another reverse proxy, mostly for centralized common access to Dynatrace and other applications. In any case, NGINX on the Managed node automatically balances the UI/API traffic to the best node for you. This UI/API traffic might end up on a different node, depending on health. Look at the NGINX config on a Managed node for inspiration.

I'd recommend:

- Not touching the way OAs communicate, unless there is really a special need for doing it differently

- Using a reverse proxy for UI/API traffic if:

  - the customer requires UI access to go through a central reverse proxy (for auditing purposes, for example, or because of the network architecture)

  - they require a custom DNS name for the Managed cluster and they already have SSL certificate management automated on the proxy (you can still keep the default *.dynatrace-managed.com domain on the cluster)

Typically I recommend not doing any VIP and sticking with the default configuration.

FYI there is the /rest/health URL path on the Managed Node to check for its status. This is the right method of checking status of a Managed Node.

Julius

06 May 2022 10:17 AM

Thanks for all your insight. I have some doubts on some of the points:

- It's not easy to separate UI / OA traffic, as the same name is used by both. Additionally, OAs get a list of IPs, and of course all AGs (possibly of the same Network Zone).

- Balancing OA traffic on the Managed nodes seems an important point to me. I have one node kicking in ALR, while the others are way below it in terms of load. It seems that for OA traffic the OAs themselves decide how the load is balanced, while for the GUI it's the server/NGINX that distributes the load.

- Sticking in a VIP for OA traffic might have several inconveniences, but are they really an issue in a Dynatrace Managed architecture?

Of course, I could have a VIP and still let some OAs have direct connections. I'm just not sure whether this would create an imbalance between the nodes too...

06 May 2022 11:50 AM

@AntonioSousa it is possible to handle it by setting the IP address in the CMC for each node. If you set it to the IP address, only this IP address will be propagated to agents. So you can force agents to connect to the correct IP. I'm just not sure if you can set an IP which is not local - I never tried that, but I guess it works.

As for the balancing of agent traffic between nodes: did you try to validate it using cluster metrics in the local self-monitoring environment? It also shows you the traffic per AG located on the cluster nodes. Then you can check whether it's really unbalanced or there is another reason (different HW, for example).

I personally don't see any benefit in setting up a VIP for OA traffic. If there are network route issues, you can also use HTTP proxies to connect OAs to the desired endpoints, if that solves your case.

06 May 2022 12:32 PM

Yes, I have been using several dsfm metrics to check the unbalance. They have been great! I have also used oneagentctl to get the cluster nodes to which certain OneAgents are connecting, and that too has provided very good information. We then got to the point where we discovered that certain routes were not allowed...
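As an illustration of the kind of check this involves, here is a small sketch that takes a mapping of OneAgent host to the cluster node it is currently connected to and flags nodes carrying much more than their fair share. How the mapping is collected (by hand from oneagentctl output, for instance) is left open, and the function names and the 1.5x tolerance are arbitrary assumptions of mine.

```python
from collections import Counter


def node_shares(agent_to_node: dict) -> dict:
    """Fraction of agents connected to each node that has at least one agent."""
    counts = Counter(agent_to_node.values())
    total = sum(counts.values())
    return {node: n / total for node, n in counts.items()} if total else {}


def overloaded_nodes(agent_to_node: dict, all_nodes: list, tolerance: float = 1.5) -> list:
    """Nodes whose share of agents exceeds tolerance times the fair share."""
    shares = node_shares(agent_to_node)
    fair = 1 / len(all_nodes)  # share each node would hold if perfectly balanced
    return sorted(n for n in all_nodes if shares.get(n, 0.0) > tolerance * fair)
```

Counting connections is only a rough proxy for load, since agents differ a lot in the traffic they generate, so the self-monitoring metrics remain the better source.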

There are of course multiple ways to solve it, and there are certainly many other combinations that might occur elsewhere. The issue here is that Dynatrace only recommends a round-robin DNS setup, with no other option documented 🤔

06 May 2022 12:59 PM

OA communication does not use round-robin DNS, API/UI uses that.

There is a guide on how to set up a load balancer in front of Managed, but it is targeted at the UI.
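To see what round-robin DNS actually hands out to UI/API clients, one can list every address the cluster name resolves to and compare it with the expected node IPs. This is a minimal sketch using Python's standard resolver call; the function names are mine, and the hostname and IPs you pass in are whatever applies to your cluster.

```python
import socket


def resolve_all(hostname: str, port: int = 443) -> list:
    """Return every distinct address the resolver reports for hostname."""
    infos = socket.getaddrinfo(hostname, port, type=socket.SOCK_STREAM)
    # Each entry is (family, type, proto, canonname, sockaddr); sockaddr[0] is the IP.
    return sorted({info[4][0] for info in infos})


def missing_nodes(hostname: str, expected_ips: list, port: int = 443) -> list:
    """Expected node IPs that the DNS name does not currently resolve to."""
    return sorted(set(expected_ips) - set(resolve_all(hostname, port)))
```

An empty result from `missing_nodes` means the name covers all expected nodes; it does not tell you how a given client picks among them.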

06 May 2022 03:10 PM

There are some cases where OAs do use the round-robin DNS. The FQDN for the cluster is sent to OAs, and with oneagentctl I have seen some of them using it (marked with an asterisk). How OAs choose which entry to use from the list they receive is still a mystery to me...

06 May 2022 05:32 PM - edited 06 May 2022 07:40 PM

@AntonioSousa I have never seen the FQDN of the cluster pushed to OneAgents. There is only one exception: if you set it explicitly using oneagentctl. Someone must have set this during installation or afterwards.

17 Jun 2022 12:52 AM

- Dynatrace Cluster nodes and ActiveGates have a /rest/health endpoint. You could configure your load balancer with an HTTP health monitor that would check that endpoint. It returns RUNNING when it's up.

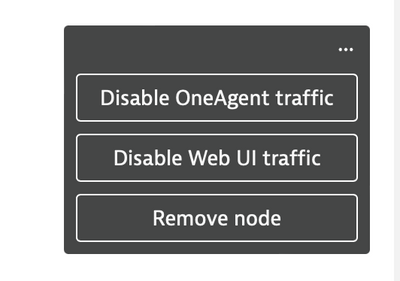

- Dynatrace Cluster nodes can be configured for UI traffic or OneAgent traffic. Out of the box they're configured for both.

- We have a VIP in front of our cluster nodes for two reasons. First, it uses our corporate domain, and second, it allows us to adhere to our corporate SSL certificate policies. We do it with the understanding that NGINX is doing additional UI load balancing under the covers. We haven't experienced any unbalancing of our cluster, but we don't have the routing issues you appear to have in your environment. Are you trying to use a load balancer to address your routing issues?
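For anyone wanting to script the /rest/health check from the first point before wiring it into a load balancer monitor, here is a rough sketch. It assumes, beyond what is stated above, that a healthy node answers with HTTP 200 and that the body contains the text RUNNING; the function names are my own and the base URL is whatever your node or ActiveGate listens on.

```python
import urllib.request


def is_running(status_code: int, body: str) -> bool:
    """Treat a node as up only on HTTP 200 with RUNNING in the body (assumption)."""
    return status_code == 200 and "RUNNING" in body


def check_node(base_url: str, timeout: float = 5.0) -> bool:
    """Query <base_url>/rest/health and report whether the node looks healthy."""
    url = base_url.rstrip("/") + "/rest/health"
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return is_running(resp.status, resp.read().decode("utf-8", "replace"))
    except OSError:
        # URLError, HTTPError, TLS failures and timeouts all derive from OSError.
        return False
```

A real load balancer monitor would do the same thing natively: send a GET to /rest/health and match on the response content.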