- Re: Problem with multiple Spark Submit processes/jvms

08 Nov 2017 11:51 AM

Hi,

When availability monitoring is configured for the Spark Submit process group and two or more separate Spark processes/JVMs are running on the same server, no problem is created when one of them stops.

- Labels: process groups

08 Nov 2017 02:03 PM

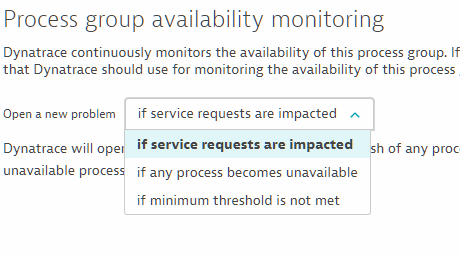

Which kind of process group availability monitoring did you use?

08 Nov 2017 02:29 PM

I used "If any process becomes unavailable"

Mike

08 Nov 2017 02:33 PM

OK, the issue here is that when multiple processes of a process group are running on the same server, they are normally treated as worker processes of the same "meta process". As such, we do not alert if the number of workers goes from 2 to 1. It's an idea akin to threads. If you need different behavior, you need to give the different workers their own identity. Look at the help on how to customize process group detection. The Node ID concept can be used to have multiple independent processes within the same group on the same server.
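To illustrate the behavior described above, here is a toy model in Python. It is only a sketch of the idea, not how Dynatrace implements availability monitoring: the function name, the tuple layout, and the node ID values (`job-a`, `job-b`) are all hypothetical. Each process is represented by an identity of host, process group, and node ID, and an identity counts as unavailable only when no process with that identity is left.

```python
def unavailable_instances(before, after):
    """Return the identities that were running before and are gone now.

    Each process is a (host, process_group, node_id) tuple. node_id is None
    when the process has no identity of its own (hypothetical model).
    """
    still_running = set(after)
    return {identity for identity in before if identity not in still_running}


# Two workers sharing one identity: going from 2 to 1 leaves the identity alive.
shared = [("srv1", "spark-submit", None), ("srv1", "spark-submit", None)]
print(unavailable_instances(shared, shared[:1]))

# Two workers with their own node IDs: losing one makes that identity unavailable.
distinct = [("srv1", "spark-submit", "job-a"), ("srv1", "spark-submit", "job-b")]
print(unavailable_instances(distinct, distinct[:1]))
```

In the first case nothing is reported, because the remaining worker still carries the shared identity. In the second case the stopped process is reported, because nothing else shares its node ID.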

08 Nov 2017 04:39 PM

Thanks Michael, I made the changes and can manage each process as 1 worker.