- Dynatrace Community

- Ask

- Dynatrace Managed Q&A

- Re: Cluster Node Elasticsearch storage service is malfunctioning ERROR

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Pin this Topic for Current User

- Printer Friendly Page

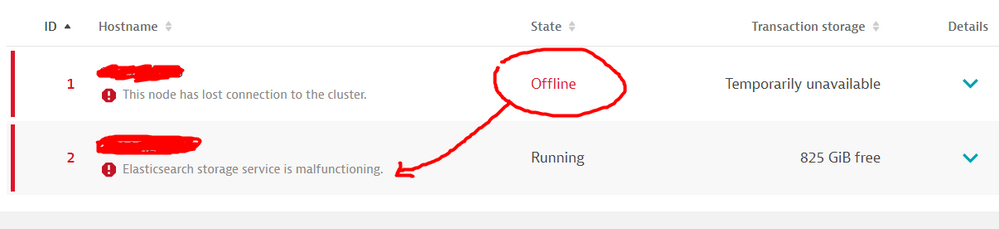

"Cluster Node Elasticsearch storage service is malfunctioning" error


16 Mar 2023 08:12 AM - last edited on 20 Mar 2023 03:31 PM by Ana_Kuzmenchuk

Hi,

We had a one-node Dynatrace Managed cluster. Last week we added a second node, so the cluster had two nodes. After three days we shut down the old node, and about 15 minutes later we saw the error "Elasticsearch storage service is malfunctioning" on the deployment status screen. The details in the node configuration show: "Could not establish communication with Elasticsearch server on SERVER at location .....node ip .... ". While the old node is shut down, the cluster runs only on the new node; the problem disappears when I start the old node again. Please help me.

Thank you


16 Mar 2023 08:19 AM - edited 16 Mar 2023 10:46 AM

Check the Elasticsearch log files on the nodes. A common scenario is that someone reloaded the default system-level firewall rules, which blocked communication between the Elasticsearch nodes. Dynatrace has its own service for setting up iptables rules; it does not add them to the default firewall service such as firewalld.

Try running

launcher/firewall.sh restart

on the node or reload the Dynatrace firewall rules using systemd:

systemctl restart dynatrace-firewall
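To see whether a firewall is actually blocking traffic between the nodes, a plain TCP connection test can help. The following is only a minimal sketch, not a Dynatrace tool: the function name `port_open` and the placeholder host are assumptions for illustration, and port 9300 is taken from the Elasticsearch transport addresses that appear in the log output in this thread.

```python
import socket

def port_open(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False

# Hypothetical usage: run on one node and replace the placeholder
# with the address of the other node.
# print(port_open("OTHER_NODE_IP", 9300))
```

A result of `False` from one node to the other only shows that the port is unreachable at that moment; it does not by itself tell you whether the cause is a firewall rule or a stopped service.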


17 Mar 2023 07:13 AM

Hi @Julius_Loman, when I checked the Elasticsearch log file, I saw a WARN entry like the one below:

[2023-03-17T06:59:17,613 UTC][WARN ][o.e.c.c.ClusterFormationFailureHelper] [New_Node_IP_Adress] master not discovered or elected yet, an election requires a node with id [f9NXLfKiT3i2YTqpkr7UCA], have discovered [{New_Node_IP_Adress}{DWdyHwDUTyCySI2gKjASPQ}{9FcfKoqeRnOzax15qi3-Mw}{New_Node_IP_Adress}{New_Node_IP_Adress:9300}{dimr}, {OLD_Node_IP_Adress}{f9NXLfKiT3i2YTqpkr7UCA}{TmlH3Ea1RK2fCKMkKa3zyA}{OLD_Node_IP_Adress}{OLD_Node_IP_Adress:9300}{dimr}] which is a quorum; discovery will continue using [OLD_Node_IP_Adress:9300] from hosts providers and [{New_Node_IP_Adress}{DWdyHwDUTyCySI2gKjASPQ}{9FcfKoqeRnOzax15qi3-Mw}{New_Node_IP_Adress}{New_Node_IP_Adress:9300}{dimr}, {OLD_Node_IP_Adress}{f9NXLfKiT3i2YTqpkr7UCA}{TmlH3Ea1RK2fCKMkKa3zyA}{OLD_Node_IP_Adress}{OLD_Node_IP_Adress:9300}{dimr}] from last-known cluster state; node term 22, last-accepted version 1
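The warning above is long, so it can be easier to read after pulling out the node id the election requires and matching it against the discovered nodes. This is a rough sketch under assumptions: the helper name `parse_election_warning` is made up for illustration, and the regular expressions assume the message wording and the `{name}{id}{ephemeral id}{host}{address}{roles}` node layout seen in this particular log line. Other Elasticsearch versions or other variants of the message may not match.

```python
import re

def parse_election_warning(line):
    """Return (required_node_id, {node_id: node_name}) from one WARN line."""
    required = re.search(r"requires a node with id \[([^\]]+)\]", line)
    discovered = re.search(r"have discovered \[(.*?)\] which is", line)
    nodes = {}
    if discovered:
        # Assumed layout per node: {name}{id}{ephemeral id}{host}{address}{roles}
        node_pattern = r"\{([^}]*)\}\{([^}]*)\}\{[^}]*\}\{[^}]*\}\{[^}]*\}\{[^}]*\}"
        for name, node_id in re.findall(node_pattern, discovered.group(1)):
            nodes[node_id] = name
    return (required.group(1) if required else None), nodes

# Hypothetical usage with a line read from the Elasticsearch log:
# required_id, nodes = parse_election_warning(line)
# print(required_id, "belongs to", nodes.get(required_id))
```

Applied to the line quoted above, the required id `f9NXLfKiT3i2YTqpkr7UCA` maps to the entry shown as `OLD_Node_IP_Adress`, which suggests the new node cannot elect a master without the old one.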


16 Mar 2023 10:52 AM

For Dynatrace Managed clusters, a two-node cluster is only a transitional state on the way from one node to three. If you check the documentation, you need at least 3 nodes:

| We recommend multi-node setups for failover and data redundancy. A sufficiently sized 3-node cluster is the recommended setup |

You can avoid many problems by expanding your cluster to 3 nodes.

Regards,

Romanenkov Alex
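The reason two nodes give no extra failover can be illustrated with simple majority arithmetic. This is a generic sketch of majority-based quorum, not a description of how Dynatrace or Elasticsearch pick their voting nodes internally; the function names are made up for illustration.

```python
def majority(voting_nodes):
    """Smallest number of nodes that forms a strict majority."""
    return voting_nodes // 2 + 1

def tolerated_failures(voting_nodes):
    """How many nodes can be lost while a majority still remains."""
    return voting_nodes - majority(voting_nodes)

for n in (1, 2, 3):
    print(n, "nodes: majority", majority(n), "tolerates", tolerated_failures(n), "failure(s)")
```

Under this model a 2-node cluster tolerates no more failures than a 1-node cluster, while a 3-node cluster can lose one node and still keep a majority.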


16 Mar 2023 12:06 PM

I think in this case @akerimd wants to migrate from one node to another, which is why they switched off the original node three days later. As far as I understand, the new node is still trying to connect to Elasticsearch on the original, switched-off node. @akerimd, am I correct?

Best regards,

Mizső


16 Mar 2023 02:13 PM

Yes @Mizső, you are correct.

We had to change the IP address of the old node, so we wanted to add a second node and then shut down the old one, but after shutting it down we ran into these problems. For this reason I am keeping the old node running for now 😞


17 Mar 2023 07:26 AM - edited 17 Mar 2023 07:30 AM

First of all, I would open a Support case for this.

Since you added a second node, it started to sync with the first one; that is what a cluster does.

Apparently you did this to move to another node. (I do not think you would need this procedure for an IP change, by the way.)

I wonder how you "closed the old node". You can't just shut it down.

Since it's operating in a cluster and syncing, you need a more careful approach. You first need to disable it and make sure it's no longer syncing with the cluster. This is described here: Remove a cluster node | Dynatrace Docs

Especially note the text:

Remove a disabled cluster node

Before proceeding with node removal, it's recommended that you wait for at least the duration of the transaction storage data retention period (up to 35 days). This is because while all metrics are replicated in the cluster, the raw transaction data isn't stored redundantly. Removing a node before its transaction storage data retention period expires may impact code-level and user-session data analysis.

Remove no more than one node at a time. To avoid data loss, allow 24 hours before removing any subsequent nodes. This is because it takes up to 24 hours for the long-term metrics replicas to be automatically redistributed on the remaining nodes.
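The waiting periods quoted above are easy to turn into concrete dates. The following is a small sketch of that arithmetic only; the function names are hypothetical, the 35-day value is the upper bound mentioned in the quoted text (your configured retention may be shorter), and nothing here talks to a Dynatrace cluster.

```python
from datetime import datetime, timedelta

def earliest_removal(disabled_at, retention_days=35):
    """Earliest time a disabled node can be removed without cutting retention short."""
    return disabled_at + timedelta(days=retention_days)

def earliest_next_removal(previous_removal_at):
    """Earliest time to remove a further node, allowing 24 hours for redistribution."""
    return previous_removal_at + timedelta(hours=24)

# Hypothetical usage: a node disabled on 16 Mar 2023 at 08:12.
# print(earliest_removal(datetime(2023, 3, 16, 8, 12)))
```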

And lastly, as already remarked here, you should run either a 1-node or a 3-node cluster, where of course 3 is ideal 🙂

But that of course depends on the size of the environment and what you are using it for.


21 Mar 2023 07:16 PM

The problem still continues. When I shut down the old node with the (dynatrace.sh stop) method, I get an error on the 2nd node as follows. Please help.
