Re: Webhook pods ephemeral storage issue in OpenShift

02 Sep 2022 09:23 PM - last edited on 15 Sep 2022 10:00 AM by Ana_Kuzmenchuk

Hi Folks,

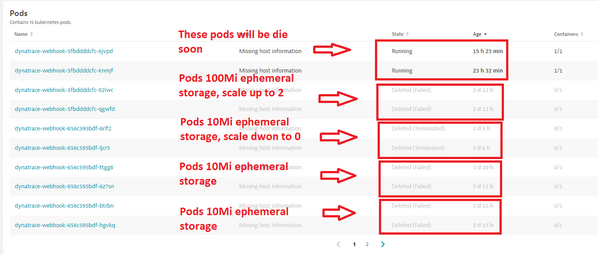

Last week we upgraded the Dynatrace operators on two OpenShift clusters from 0.2 to 0.8.1. (Unfortunately, the client did not have the human resources to upgrade more frequently.) It is a classicFullStack implementation (installed via OperatorHub), with a node selector that limits it to the worker nodes. The upgrade seemed to go well, but after a few hours the number of failed pods started to increase slowly. We checked which pods were affected, and it turned out that the Dynatrace-webhook pods were failing within a few hours. When we looked, there were approx. 30 failed Dynatrace-webhook pods alongside the 2 Dynatrace-webhook pods that were actually running. Based on the following Kubernetes warning event, it seems the free ephemeral storage dropped to 0%:

"Pod ephemeral local storage usage exceeds the total limit of containers 10Mi."

We increased the webhook pods' ephemeral storage limit from 10Mi to 100Mi. The situation changed slightly. :-) The Dynatrace-webhook pods survived for more than a day, but after that they failed even with the increased ephemeral storage. Additional info: these pods always started on the Kubernetes infrastructure (role) nodes.
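For reference, a change like the 10Mi to 100Mi increase can be written as a strategic merge patch against the webhook deployment. This is only a minimal sketch: the container name `webhook` is an assumption (check the actual name in your deployment), and the helper `ephemeral_storage_patch` is made up for illustration.

```python
import json


def ephemeral_storage_patch(container, limit):
    """Build a strategic merge patch that sets one container's
    ephemeral-storage limit in a Deployment's pod template."""
    return {
        "spec": {
            "template": {
                "spec": {
                    "containers": [
                        {
                            # Strategic merge matches list entries by "name".
                            "name": container,
                            "resources": {
                                "limits": {"ephemeral-storage": limit}
                            },
                        }
                    ]
                }
            }
        }
    }


# "webhook" is an assumed container name, not confirmed by this thread.
patch = ephemeral_storage_patch("webhook", "100Mi")
print(json.dumps(patch))
```

If the script is saved as `patch.py`, the printed JSON could be applied with something like `kubectl patch deployment dynatrace-webhook -n <namespace> --type strategic -p "$(python3 patch.py)"`, where the deployment name and namespace are again assumptions about your setup.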

I do not know why these webhook pods are started at all; I thought this type of pod was required only for cloudNative and application-only instrumentation.

Does anyone have any idea about this strange behavior of the Dynatrace-webhook servers? Have you ever run into this issue?

Thanks in advance.

Br, Mizső

- Labels: kubernetes, openshift, webhook

14 Sep 2022 04:45 PM

Hi Mizso,

We have the same problem at one of my customers! We will open a support call.

Cheers Siegi

14 Sep 2022 05:17 PM

I have also raised a ticket with support, without any success or solution so far... 😞

At that client there are three clusters. In the sandbox and acceptance clusters we increased the ephemeral storage of the dynatrace-webhook pods from 10Mi to 100Mi. Since then the sbx environment has had no failed dynatrace-webhook pods (11 days), but unfortunately the acceptance environment has had failed dynatrace-webhook pods within 2-4 days. In production we increased the ephemeral storage size from 10Mi to 200Mi last week... we are waiting for the pods to fail... So I am a little bit confused about this story.

However, I still have not received an answer from support as to why we need webhook pods with classicFullStack instrumentation.
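To compare the environments while waiting, the failed pods can be counted from the JSON that `kubectl get pods -n <namespace> -o json` prints. A minimal sketch, assuming the pod names start with `dynatrace-webhook`; the function name and the sample data below are hypothetical, only the `status.phase`, `status.reason` and `status.message` fields are standard Kubernetes pod fields.

```python
def failed_webhook_pods(pod_list, prefix="dynatrace-webhook"):
    """Return (name, reason, message) for every pod in phase "Failed"
    whose name starts with the given prefix."""
    failed = []
    for pod in pod_list.get("items", []):
        name = pod.get("metadata", {}).get("name", "")
        status = pod.get("status", {})
        if name.startswith(prefix) and status.get("phase") == "Failed":
            failed.append(
                (name, status.get("reason", ""), status.get("message", ""))
            )
    return failed


# Hypothetical sample shaped like the output of `kubectl get pods -o json`.
sample = {
    "items": [
        {
            "metadata": {"name": "dynatrace-webhook-aaa"},
            "status": {
                "phase": "Failed",
                "reason": "Evicted",
                "message": "Pod ephemeral local storage usage exceeds "
                           "the total limit of containers 10Mi.",
            },
        },
        {
            "metadata": {"name": "dynatrace-webhook-bbb"},
            "status": {"phase": "Running"},
        },
        {
            "metadata": {"name": "some-other-pod"},
            "status": {"phase": "Failed", "reason": "Error"},
        },
    ]
}

for name, reason, message in failed_webhook_pods(sample):
    print(name, reason, message)
```

With real data, the dictionary would come from `json.load` on the saved `kubectl` output instead of the inline sample.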

Br, Mizső

15 Sep 2022 02:21 PM

You're right, according to the DT docs it should not be needed at all in ClassicFullStack...

15 Sep 2022 02:48 PM

It's a known issue, and according to this issue on GitHub it can be fixed by removing the limit from the deployment.
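Removing a limit from a deployment can be expressed as a JSON Patch (RFC 6902) `remove` operation. The following is only a sketch under assumptions: that the limit in question is the `ephemeral-storage` one, and that the webhook container is the first entry (index 0) in the pod template. The helper names are made up for illustration.

```python
import json


def escape_pointer_token(token):
    """Escape one JSON Pointer path segment (RFC 6901):
    "~" becomes "~0" and "/" becomes "~1"."""
    return token.replace("~", "~0").replace("/", "~1")


def remove_limit_patch(container_index, resource="ephemeral-storage"):
    """Build a JSON Patch that removes a single resource limit from
    the container at the given index in a Deployment's pod template."""
    path = "/spec/template/spec/containers/{}/resources/limits/{}".format(
        container_index, escape_pointer_token(resource)
    )
    return [{"op": "remove", "path": path}]


# Index 0 is an assumption about where the webhook container sits.
print(json.dumps(remove_limit_patch(0)))
```

The printed JSON could be passed to `kubectl patch deployment <name> -n <namespace> --type json -p '<json>'`. Note that a JSON Patch `remove` fails if the path does not exist, so it only applies while the limit is still set.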

15 Sep 2022 02:56 PM - edited 15 Sep 2022 03:08 PM

Hi @Enrico_F

Thanks very much for the update. A deployment without limits is not allowed in this production environment. The client is going to live with this issue until the 0.9 release. We can monitor the failed pods with DT. 🙂

Br, Mizső

05 Oct 2022 01:49 PM

Hi Folks,

The issue has been solved. Dynatrace Operator v0.9.0 has been released.

Release v0.9.0 · Dynatrace/dynatrace-operator · GitHub

Br, Mizső
