- Re: Host Level Disk Anomaly Detection not being honored

26 Oct 2021 07:19 PM

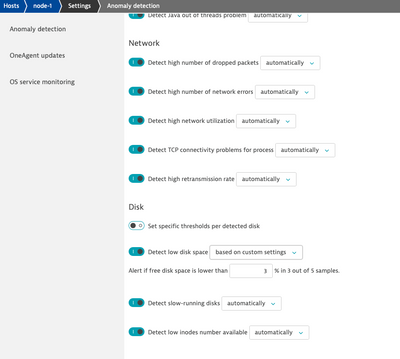

OneAgent was installed on a Linux instance, which immediately triggered a low disk space problem on a specific filesystem. A custom global low disk space anomaly detection rule is configured in our environment, but its percentage threshold is too restrictive for this particular host. In the host settings, I disabled "Use host group anomaly detection", enabled "Set specific thresholds per detected disk", and created custom disk thresholds for this host. That change should have cleared the problem, but this did not happen immediately. I then closed the problem manually, but it immediately reopened, indicating it was triggered by the global threshold and not the host-specific threshold. I tried restarting OneAgent on the host, but that didn't seem to help. Is there some additional configuration needed to ensure the host-specific anomaly detection configuration is honored?

27 Oct 2021 01:20 PM

Did you change it here?

Sebastian

27 Oct 2021 02:49 PM

That is the place, but I have "Set specific thresholds per detected disk" enabled and I set the threshold for a specific disk.

I also found that if I exclude the management zone that the host is a member of from the global settings, the problem clears. I'm not sure whether that is because the host-level thresholds are being honored or because the 3% default is simply being used. I could probably test that if you think it would help.

27 Oct 2021 04:25 PM

I verified that breaching the host-specific low disk threshold triggers the problem. After it was triggered, I updated the global settings to include the management zone that the host is a member of. I then freed some space on the host so that usage fell below the host-specific threshold but stayed above the global low disk space threshold. The problem triggered by the host-specific threshold cleared, but a new problem was created citing the threshold from the global setting. Putting the filter that excludes the management zone back on the global setting once again cleared the problem.
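
To make the observed behavior concrete, here is a minimal Python sketch. It only models what the tests above suggest (the global rule and the host-specific rule appear to be evaluated independently unless the host's management zone is excluded from the global rule); it is not Dynatrace's actual evaluation logic. The 80% and 95% thresholds, the zone name `zone-a`, and all class and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """Hypothetical model of a low disk space rule."""
    name: str
    max_used_pct: float                      # a problem opens when usage exceeds this
    excluded_zones: frozenset = frozenset()  # management zones the rule skips

    def applies_to(self, host_zones):
        # The rule applies unless the host is in one of the excluded zones.
        return not (self.excluded_zones & set(host_zones))


def open_problems(used_pct, host_zones, rules):
    """Return the names of all rules breached by the given disk usage.

    Each rule is checked on its own; no rule overrides another.
    """
    return [
        rule.name
        for rule in rules
        if rule.applies_to(host_zones) and used_pct > rule.max_used_pct
    ]


# Assumed values for illustration only.
host_rule = Rule("host-specific", max_used_pct=95.0)
global_rule = Rule("global", max_used_pct=80.0)
global_rule_filtered = Rule(
    "global", max_used_pct=80.0, excluded_zones=frozenset({"zone-a"})
)

# Usage of 90% sits between the two thresholds, as in the test described above.
between = open_problems(90.0, ["zone-a"], [global_rule, host_rule])
between_filtered = open_problems(90.0, ["zone-a"], [global_rule_filtered, host_rule])
```

In this model, `between` contains only the global rule, matching the new problem that cited the global threshold, while `between_filtered` is empty, matching the problem clearing once the management zone was excluded again.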
