- Kubernetes operator fails to start

06 Oct 2023 09:47 AM

Hi!

I've tried to follow the quickstart guide for Kubernetes and I'm stuck after the first part: I can't get the operator to start successfully. I'm on Exoscale managed Kubernetes, running v1.25.14. I downloaded the generated `dynakube.yaml` file and ran the suggested script of kubectl commands. My operator keeps failing and restarting with the following log messages.

{"error":"Timeout: failed waiting for *v1.Secret Informer to sync","level":"error","logger":"main.controller-runtime.source","msg":"failed to get informer from cache","stacktrace":"github.com/Dynatrace/dynatrace-operator/src/logger.logSink.Error

github.com/Dynatrace/dynatrace-operator/src/logger/logger.go:40

sigs.k8s.io/controller-runtime/pkg/log.(*DelegatingLogSink).Error

sigs.k8s.io/controller-runtime@v0.14.6/pkg/log/deleg.go:138

github.com/go-logr/logr.Logger.Error

github.com/go-logr/logr@v1.2.4/logr.go:299

sigs.k8s.io/controller-runtime/pkg/source.(*Kind).Start.func1.1

sigs.k8s.io/controller-runtime@v0.14.6/pkg/source/source.go:148

k8s.io/apimachinery/pkg/util/wait.runConditionWithCrashProtectionWithContext

k8s.io/apimachinery@v0.26.3/pkg/util/wait/wait.go:235

k8s.io/apimachinery/pkg/util/wait.poll

k8s.io/apimachinery@v0.26.3/pkg/util/wait/wait.go:582

k8s.io/apimachinery/pkg/util/wait.PollImmediateUntilWithContext

k8s.io/apimachinery@v0.26.3/pkg/util/wait/wait.go:547

sigs.k8s.io/controller-runtime/pkg/source.(*Kind).Start.func1

sigs.k8s.io/controller-runtime@v0.14.6/pkg/source/source.go:136","ts":"2023-10-06T08:21:10.515Z"}

{"error":"Timeout: failed waiting for *v1.Deployment Informer to sync","level":"error","logger":"main.controller-runtime.source","msg":"failed to get informer from cache","stacktrace":"github.com/Dynatrace/dynatrace-operator/src/logger.logSink.Error

github.com/Dynatrace/dynatrace-operator/src/logger/logger.go:40

sigs.k8s.io/controller-runtime/pkg/log.(*DelegatingLogSink).Error

sigs.k8s.io/controller-runtime@v0.14.6/pkg/log/deleg.go:138

github.com/go-logr/logr.Logger.Error

github.com/go-logr/logr@v1.2.4/logr.go:299

sigs.k8s.io/controller-runtime/pkg/source.(*Kind).Start.func1.1

sigs.k8s.io/controller-runtime@v0.14.6/pkg/source/source.go:148

k8s.io/apimachinery/pkg/util/wait.runConditionWithCrashProtectionWithContext

k8s.io/apimachinery@v0.26.3/pkg/util/wait/wait.go:235

k8s.io/apimachinery/pkg/util/wait.poll

k8s.io/apimachinery@v0.26.3/pkg/util/wait/wait.go:582

k8s.io/apimachinery/pkg/util/wait.PollImmediateUntilWithContext

k8s.io/apimachinery@v0.26.3/pkg/util/wait/wait.go:547

sigs.k8s.io/controller-runtime/pkg/source.(*Kind).Start.func1

sigs.k8s.io/controller-runtime@v0.14.6/pkg/source/source.go:136","ts":"2023-10-06T08:21:10.515Z"}

Thanks

David

Labels: kubernetes

30 Oct 2023 10:19 AM

I get the same error when trying to upgrade to Dynatrace 0.14.1. Can anyone help?

lab1-dynatrace-operator-749f55ccbf-wnplh lab1-dynatrace-operator sigs.k8s.io/controller-runtime@v0.16.3/pkg/internal/source/kind.go:56","ts":"2023-10-30T10:17:36.506Z"}{"error":"Timeout: failed waiting for *v1.Secret Informer to sync","level":"error","logger":"main.controller-runtime.source.EventHandler","msg":"failed to get informer from cache","stacktrace":"github.com/Dynatrace/dynatrace-operator/src/logger.logSink.Error

lab1-dynatrace-operator-749f55ccbf-wnplh lab1-dynatrace-operator github.com/Dynatrace/dynatrace-operator/src/logger/logger.go:40

lab1-dynatrace-operator-749f55ccbf-wnplh lab1-dynatrace-operator sigs.k8s.io/controller-runtime/pkg/log.(*delegatingLogSink).Error

lab1-dynatrace-operator-749f55ccbf-wnplh lab1-dynatrace-operator sigs.k8s.io/controller-runtime@v0.16.3/pkg/log/deleg.go:142

lab1-dynatrace-operator-749f55ccbf-wnplh lab1-dynatrace-operator github.com/go-logr/logr.Logger.Error

lab1-dynatrace-operator-749f55ccbf-wnplh lab1-dynatrace-operator github.com/go-logr/logr@v1.2.4/logr.go:299

lab1-dynatrace-operator-749f55ccbf-wnplh lab1-dynatrace-operator sigs.k8s.io/controller-runtime/pkg/internal/source.(*Kind).Start.func1.1

lab1-dynatrace-operator-749f55ccbf-wnplh lab1-dynatrace-operator sigs.k8s.io/controller-runtime@v0.16.3/pkg/internal/source/kind.go:68

lab1-dynatrace-operator-749f55ccbf-wnplh lab1-dynatrace-operator k8s.io/apimachinery/pkg/util/wait.loopConditionUntilContext.func1

lab1-dynatrace-operator-749f55ccbf-wnplh lab1-dynatrace-operator k8s.io/apimachinery@v0.28.3/pkg/util/wait/loop.go:49

lab1-dynatrace-operator-749f55ccbf-wnplh lab1-dynatrace-operator k8s.io/apimachinery/pkg/util/wait.loopConditionUntilContext

lab1-dynatrace-operator-749f55ccbf-wnplh lab1-dynatrace-operator k8s.io/apimachinery@v0.28.3/pkg/util/wait/loop.go:50

lab1-dynatrace-operator-749f55ccbf-wnplh lab1-dynatrace-operator k8s.io/apimachinery/pkg/util/wait.PollUntilContextCancel

lab1-dynatrace-operator-749f55ccbf-wnplh lab1-dynatrace-operator k8s.io/apimachinery@v0.28.3/pkg/util/wait/poll.go:33

lab1-dynatrace-operator-749f55ccbf-wnplh lab1-dynatrace-operator sigs.k8s.io/controller-runtime/pkg/internal/source.(*Kind).Start.func1

lab1-dynatrace-operator-749f55ccbf-wnplh lab1-dynatrace-operator {"level":"info","ts":"2023-10-30T10:17:36.647Z","logger":"main","msg":"Stopping and waiting for HTTP servers"}

lab1-dynatrace-operator-749f55ccbf-wnplh lab1-dynatrace-operator {"level":"info","ts":"2023-10-30T10:17:36.647Z","logger":"main","msg":"shutting down server","kind":"health probe","addr":"[::]:10080"}

lab1-dynatrace-operator-749f55ccbf-wnplh lab1-dynatrace-operator {"level":"info","ts":"2023-10-30T10:17:36.647Z","logger":"main.controller-runtime.metrics","msg":"Shutting down metrics server with timeout of 1 minute"}

lab1-dynatrace-operator-749f55ccbf-wnplh lab1-dynatrace-operator {"level":"info","ts":"2023-10-30T10:17:36.647Z","logger":"main","msg":"Wait completed, proceeding to shutdown the manager"}

lab1-dynatrace-operator-749f55ccbf-wnplh lab1-dynatrace-operator sigs.k8s.io/controller-runtime@v0.16.3/pkg/internal/source/kind.go:56","ts":"2023-10-30T10:17:36.547Z"}

07 Jan 2024 11:03 PM

Hi All,

I'm assuming your configurations use a proxy.

Operator 0.13.x introduced an ActiveGate service on port 443 (not that it's documented). It was ignored to a certain extent until the newer operators.

I got similar errors, caused by changes in the way proxies are handled in the newer operators (0.14.x and later). Dynatrace put a 'fix' in place, and I've asked for the documentation to be updated as part of our case, since it currently isn't (see Dynatrace Operator release notes version 0.14.2 - Dynatrace Docs).

To fix this, you need to apply the 'feature.dynatrace.com/no-proxy' and possibly the 'feature.dynatrace.com/oneagent-no-proxy' feature flags so that traffic to this service is routed internally. Otherwise, as we found, the pod connections try to route through the proxy, which does not know about local services.

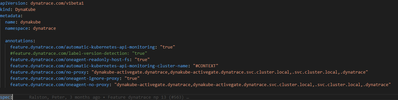

You can try updating the annotations on your DynaKube custom resource, similar to the below:

feature.dynatrace.com/no-proxy: "dynakube-activegate.dynatrace,dynakube-activegate.dynatrace.svc.cluster.local,.svc.cluster.local,.dynatrace"

feature.dynatrace.com/oneagent-ignore-proxy: "true"

feature.dynatrace.com/oneagent-no-proxy: "dynakube-activegate.dynatrace,dynakube-activegate.dynatrace.svc.cluster.local,.svc.cluster.local,.dynatrace"
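For orientation, here is a minimal sketch of where these three lines would sit in a `dynakube.yaml`, assuming the feature flags are set as annotations under `metadata.annotations` of the DynaKube resource. The `apiVersion`, the resource name `dynakube`, and the namespace `dynatrace` are assumptions inferred from the hostnames above and from operator versions of that period, so check them against your own generated file.

```yaml
# Sketch only: apiVersion, name, and namespace are assumptions, not taken from the post.
apiVersion: dynatrace.com/v1beta1   # assumed; use the apiVersion from your generated dynakube.yaml
kind: DynaKube
metadata:
  name: dynakube                    # assumed; matches the "dynakube-activegate" service prefix
  namespace: dynatrace              # assumed; matches the ".dynatrace" suffix in the hostnames
  annotations:
    # Hosts the operator should reach directly instead of through the proxy
    feature.dynatrace.com/no-proxy: "dynakube-activegate.dynatrace,dynakube-activegate.dynatrace.svc.cluster.local,.svc.cluster.local,.dynatrace"
    # OneAgent-side equivalents of the setting above
    feature.dynatrace.com/oneagent-ignore-proxy: "true"
    feature.dynatrace.com/oneagent-no-proxy: "dynakube-activegate.dynatrace,dynakube-activegate.dynatrace.svc.cluster.local,.svc.cluster.local,.dynatrace"
# spec: leave unchanged from your generated dynakube.yaml
```

If your DynaKube has a different name or lives in another namespace, the ActiveGate service hostnames in the no-proxy lists will likely need to change to match.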

Hope this helps.

05 Jan 2024 05:28 PM

Hello

I was facing the same error as both of you when trying to deploy operator v0.13.x or v0.14.x.

I can share my troubleshooting steps and findings; I hope they help.

First I tried specifying a proxy and increasing resources, but nothing changed.

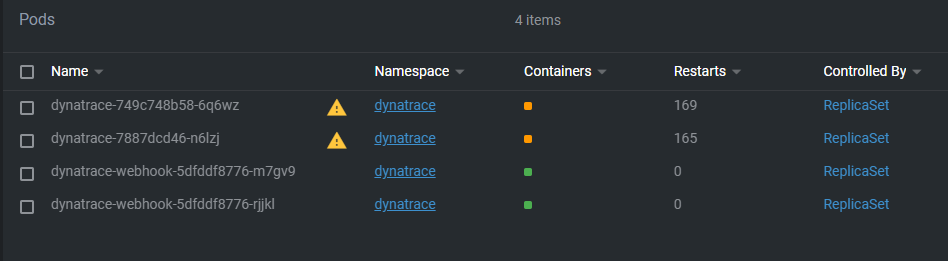

Looking at the status, I saw a lot of restarts.

Then I ran this command:

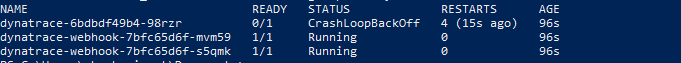

`kubectl get pods -n dynatrace`

The operator was stuck in a crash loop.

I then ran this command:

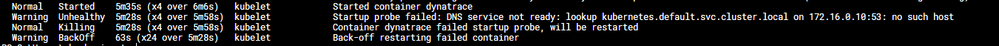

`k describe po [dynatrace-operator-pod] -n dynatrace`

We can see that the probe failed on a DNS lookup.

In our Kubernetes cluster, this is because the service is not available under cluster.local.

I finally managed to deploy the operator successfully on the cluster.

We used the latest version, v0.15.0, released on Dec 13, 2023, which resolved our issue completely:

- Fixed a DNS lookup issue in the startup probe for domains other than cluster.local

Hope this helps.
