Re: Limit Resource for OneAgent DaemonSet

15 Nov 2023 06:15 AM - last edited on 15 Nov 2023 01:45 PM by MaciejNeumann

Hi all,

We are integrating Dynatrace with Kubernetes version 1.20 using a DaemonSet.

I see that the YAML file defines resource limits for the Operator. What about the DaemonSet?

15 Nov 2023 08:25 AM

Hi,

You should be able to define the resource limits directly, since this deploys a plain DaemonSet (not a custom resource managed by the Operator).

An example of limiting resources can be found in the Kubernetes documentation: https://kubernetes.io/docs/concepts/workloads/controllers/daemonset/

200Mi is probably not enough for the OneAgent; I'd go with limits similar to those recommended for the Operator.

15 Nov 2023 08:55 AM

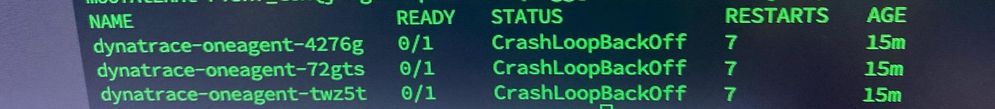

I tried it, but none of the OneAgent Pods are working.

The OneAgent Pods' status is CrashLoopBackOff.

15 Nov 2023 08:56 AM - edited 16 Nov 2023 07:25 AM

My dynatrace-oneagent.yaml:

    apiVersion: apps/v1
    kind: DaemonSet
    metadata:
      name: dynatrace-oneagent
    spec:
      selector:
        matchLabels:
          name: dynatrace-oneagent
      template:
        metadata:
          labels:
            name: dynatrace-oneagent
        spec:
          hostPID: true
          hostIPC: true
          hostNetwork: true
          nodeSelector:
            beta.kubernetes.io/os: linux
          volumes:
            - name: host-root
              hostPath:
                path: /
          containers:
            - name: dynatrace-oneagent
              image: dynatrace/oneagent
              resources:
                limits:
                  memory: 200Mi
                requests:
                  cpu: 100m
                  memory: 200Mi
              env:
                - name: ONEAGENT_INSTALLER_SCRIPT_URL
                  value: https://env/e/700/api/v1/deployment/installer/agent/unix/default/latest?arch=<arch>
                - name: ONEAGENT_INSTALLER_SKIP_CERT_CHECK
                  value: 'false'
                - name: ONEAGENT_INSTALLER_DOWNLOAD_TOKEN
                  value: ''
              args:
                - '--set-network-zone=<your.network.zone>'
              volumeMounts:
                - name: host-root
                  mountPath: /mnt/root
              securityContext:
                privileged: true

15 Nov 2023 08:57 AM

Hi, what is the crash reason? Very likely it runs out of memory at 200Mi; try increasing the limit to 500Mi or 1G.

15 Nov 2023 09:10 AM

Oh, I tried increasing it to 500M, but the Pods still don't run. I will try giving them more memory. Lol

15 Nov 2023 09:11 AM

You can describe the Pod to see what caused the failure.

15 Nov 2023 09:21 AM

I see OneAgent Pods status is: CrashLoopBackOff

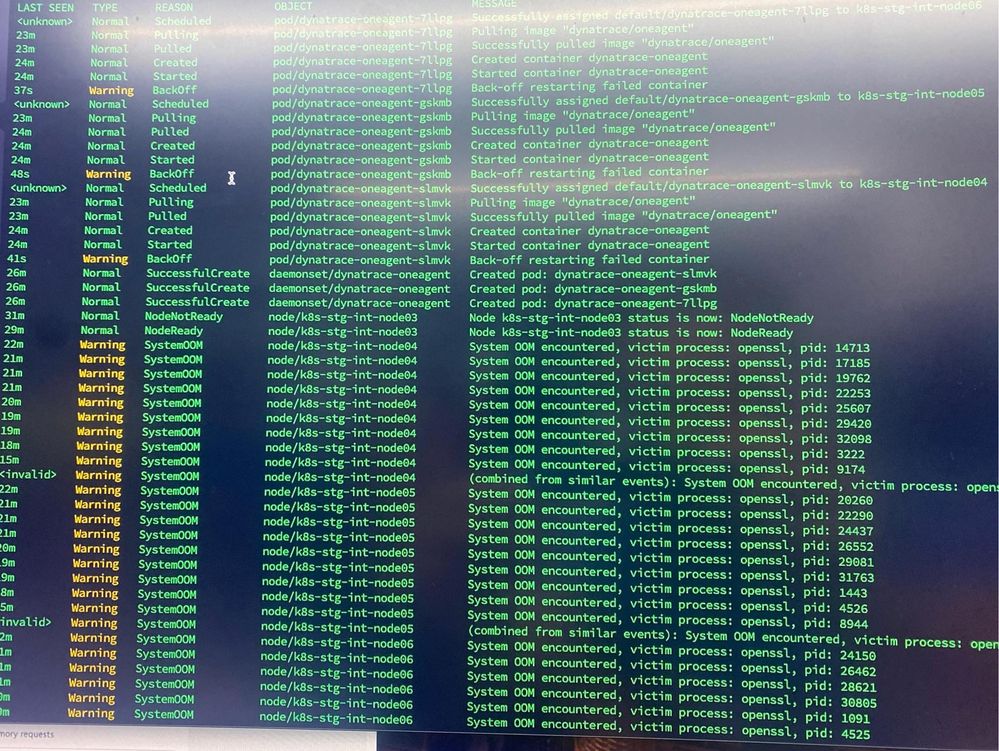

I try to get event and result as picture:

15 Nov 2023 09:29 AM

Thanks. This indicates that the container host operating system is running out of memory.

The Linux OOM killer designates a victim (in this case one of the processes from the OneAgent Pods), and that causes the CrashLoopBackOff. So you have to check that your host has sufficient memory available, and then make sure that the limit for the OneAgent is high enough.

15 Nov 2023 09:48 AM

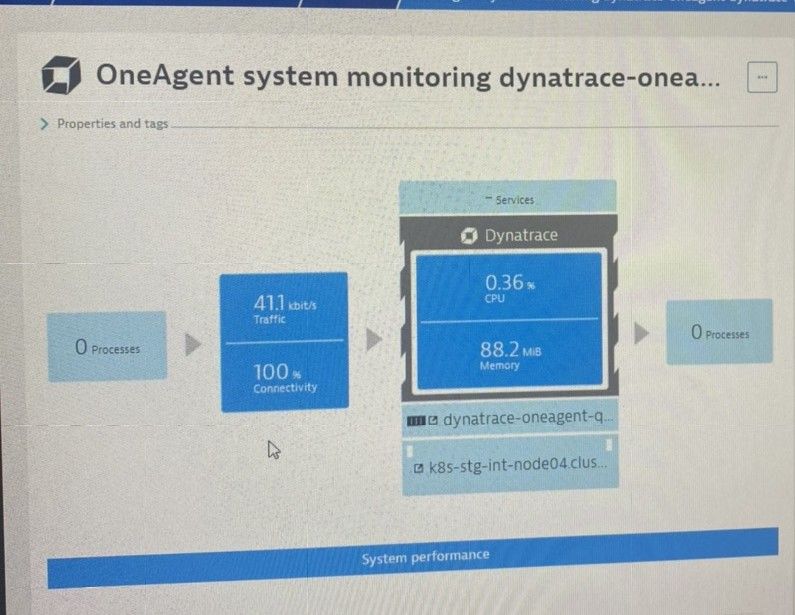

The K8s cluster have 3 master node & 3 worker node corresponding to 3 oneagent daemon. I see that 1 oneagent using a small amount of memory as picture:

16 Nov 2023 01:42 AM

Sorry, please note that I use a DaemonSet to deploy the OneAgent.

16 Nov 2023 06:33 AM - edited 16 Nov 2023 06:34 AM

Hello @xuyenpn, I removed the full token from your reply.

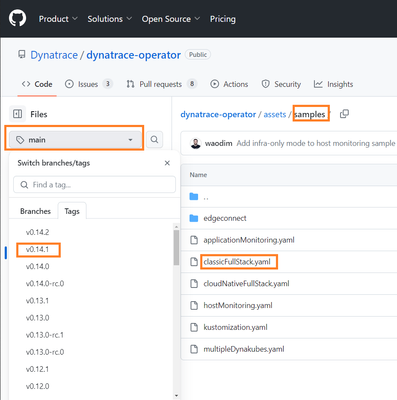

The limits are best set as defined by the Dynatrace Operator's developers.

On GitHub, you can select the desired version and see the default limits in the dynakube example file:

    oneAgentResources:
      requests:
        cpu: 100m
        memory: 512Mi
      limits:
        cpu: 300m
        memory: 1.5Gi

You can easily check this for different versions in the dynakube samples.

You can find the recommended Operator version on the documentation page.

Regards,

Alex Romanenkov

20 Nov 2023 02:09 AM - edited 20 Nov 2023 02:10 AM

Thanks for your response.

I removed the full token from my reply.

Noted. I am trying to use a DaemonSet on K8s version 1.16, without the Operator.
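
For a standalone DaemonSet like the one posted earlier in this thread, the oneAgentResources values quoted from the dynakube sample would presumably go into the container's own resources stanza. The fragment below is a minimal sketch, assuming the same container name and image as in the posted manifest, and assuming that the Operator-oriented values are also suitable for a plain DaemonSet (the thread does not confirm this):

```yaml
# Fragment of the DaemonSet pod template (spec.template.spec).
# Only the resources stanza differs from the manifest posted in this thread.
containers:
  - name: dynatrace-oneagent
    image: dynatrace/oneagent
    resources:
      requests:
        cpu: 100m
        memory: 512Mi
      limits:
        cpu: 300m
        # Assumption: value reused as-is from the dynakube sample;
        # adjust it to the memory actually available on the nodes.
        memory: 1.5Gi
```

Raising the container limit does not help if the node itself is short on memory, since the OOM killer described above can still terminate OneAgent processes in that case.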
