
Please Clarify: Self-Monitoring Metrics for Adaptive Capture Control - SaaS


10 Feb 2022 09:54 AM - edited 10 Feb 2022 10:15 AM

@markus_pfleger (nice virtually meeting again after such a long time)

@christoph_hoyer

I was looking at the new Self-Monitoring Dashboards, based on what can be found in the Hub, what is presented in this webinar, and the documentation.

There seems to be conflicting information on how the capture rate is defined. I think even the video calculates it in two different ways at two different points.

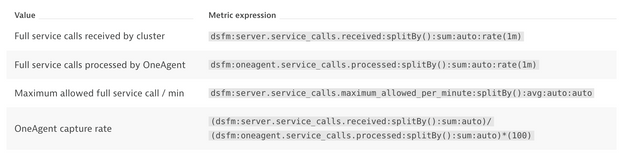

First, there is the "OneAgent Capture Rate" as explained in the documentation:

The OneAgent capture rate seems strange, as in my case the number for oneagent.service_calls.processed is much higher than server.service_calls.received (see below). The resulting rate is therefore really low, whereas the server-side capture rate is 100%.

The Server Side "Capture Rate" is calculated like this:

This one seems logical: the server receives calls and persists them. If both numbers are equal, we are all good.

Since SaaS also has server.service_calls.maximum_allowed_per_minute, does this mean that in SaaS the "processed" service calls are always limited beforehand to match the "persisted" ones, so that the capture rate is always 100%?

What if the "received" calls exceed the "service_calls.maximum_allowed_per_minute"?

Would you consider this environment healthy? I'm observing data loss and strange metric behavior on services. I have added these metric calculations:

"Persisting Rate" = (dsfm:server.service_calls.persisted)/(dsfm:server.service_calls.received)*(100)

"Capture Rate" = (dsfm:server.service_calls.received)/(dsfm:oneagent.service_calls.processed)*(100)

"Percentage of Capture Limit" = (dsfm:server.service_calls.received:splitBy():avg:auto:rate(1m)/dsfm:server.service_calls.maximum_allowed_per_minute:splitBy():avg:auto)*100
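A minimal Python sketch of the three calculations above, assuming the inputs are totals read off each metric for the same timeframe (the function names are hypothetical). Dividing the received count by the timeframe length in minutes stands in for `:rate(1m)`:

```python
def _percent(numerator: float, denominator: float) -> float:
    """Return numerator as a percentage of denominator."""
    if denominator <= 0:
        raise ValueError("denominator must be positive")
    return numerator * 100 / denominator


def persisting_rate(persisted: float, received: float) -> float:
    """Share of server-received service calls that were persisted, in percent."""
    return _percent(persisted, received)


def capture_rate(received: float, oneagent_processed: float) -> float:
    """Share of OneAgent-processed service calls that reached the server, in percent."""
    return _percent(received, oneagent_processed)


def percent_of_capture_limit(received_count: float, minutes: float,
                             max_allowed_per_minute: float) -> float:
    """Received calls per minute relative to the per-minute limit, in percent."""
    received_per_minute = received_count / minutes  # plays the role of :rate(1m)
    return _percent(received_per_minute, max_allowed_per_minute)
```

A value above 100 for `percent_of_capture_limit` means more calls per minute were received than the limit allows.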

For SaaS I'm interpreting this as:

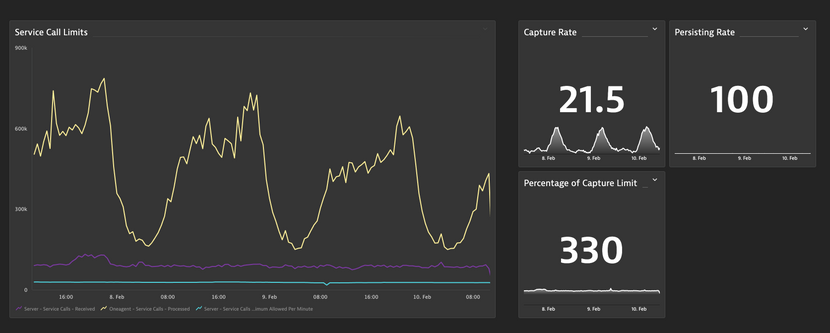

As the number of "oneagent.processed" (yellow) service calls is much higher than "server.received", is something dropped somewhere or not considered at all, or does the "oneagent.processed" metric include calls that are not relevant?

Note that the Persisting Rate is a constant 100%, although the Capture Rate fluctuates depending on the load. Hence my assumption that something gets dropped before the received metric is calculated (server-side drop, rate limit).

And then there is "server.service_calls.maximum_allowed_per_minute", which I understand should be compared to the "server.service_calls.received" metric to see whether (in SaaS) you produce more service calls than your limit allows. In my case, the "Percentage of Capture Limit" calculation tells me that I'm at 330% of my limit, i.e. well over it, which would explain the "data loss" or heavy aggregation I'm seeing on individual services.

Can you confirm this or am I missing something?


Labels: self-monitoring


04 Jun 2022 03:25 AM

@r_weber were you able to get any clarity on this?


26 Apr 2023 01:15 PM

I'm here because I have the same question regarding Dynatrace Managed.

To me it is also unclear where the "server.service_calls.maximum_allowed_per_minute" is configured. If I have a current max of 5.4k, where does that come from?


28 Apr 2023 12:40 PM

This metric is not applicable to Managed. We need it for ATMv2 in SaaS; it is not used in Managed.


01 May 2023 07:26 AM

Thanks for the confirmation and explanation.

"server.service_calls.maximum_allowed_per_minute" algorithm is 250 per HostUnit connected to the environment (HostUnits * 250), with a minimum of 5000.

Even if it is not applicable to Managed (and does not limit traffic there), it is at least a metric that can be used when a migration to SaaS is being considered. 🙂


28 Apr 2023 12:39 PM

Our documentation was updated recently; I think it is a good read and should cover your questions: https://www.dynatrace.com/support/help/observe-and-explore/purepath-distributed-traces/adaptive-traf...

Nevertheless, I also want to summarize:

dsfm:oneagent.service_calls.processed

This metric represents all service calls that OneAgent processes before any sampling (Adaptive Traffic Management) is applied. Depending on the customer's license, we may not send all the data to the cluster. This means we sample the data, and OneAgent does not send all tracing data to the cluster. The data we send contains sampling information so that the data can be extrapolated on the cluster.
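The extrapolation step can be illustrated with inverse-probability weighting, a generic sampling technique. This is a sketch under assumptions, not the documented OneAgent mechanism: the `SampledCall` record and its `weight` field (how many original calls one transmitted call stands for) are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class SampledCall:
    # Hypothetical record: number of original calls this transmitted call represents.
    # The real OneAgent wire format is not described in this thread.
    weight: int


def extrapolate_call_count(sampled: list[SampledCall]) -> int:
    """Estimate the original number of service calls by summing the
    weight (inverse sampling probability) of every transmitted call."""
    return sum(call.weight for call in sampled)
```

For example, if OneAgent processed 1000 calls and sent every fourth one with weight 4, the 250 transmitted calls extrapolate back to 1000.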

dsfm:server.service_calls.received

Now the processing on the cluster takes place. In some overload situations, we keep the cluster healthy by sampling data (Adaptive Load Reduction). If this happens, we process less data than we receive, and the received metric is higher than the processed metric.

dsfm:server.service_calls.processed

After processing, the data is persisted. Processing and persisting are separate steps, so in certain overload scenarios the processed and persisted metrics can also differ. They are also written at different points in time, so individual values may not match exactly, but the sum over time is typically the same.
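Because the two metrics are written at different times, a check for loss between processing and persisting should compare sums over a window rather than single data points. A minimal sketch; the function name and the 1% relative tolerance are arbitrary assumptions:

```python
from __future__ import annotations


def totals_match(processed: list[float], persisted: list[float],
                 tolerance: float = 0.01) -> bool:
    """Compare processed vs. persisted service calls over a whole window.

    Individual data points may differ because the metrics are written at
    different times, so only the sums are compared, within a relative tolerance.
    """
    total_processed = sum(processed)
    total_persisted = sum(persisted)
    if total_processed == 0:
        return total_persisted == 0
    return abs(total_processed - total_persisted) / total_processed <= tolerance
```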

dsfm:server.service_calls.persisted

Finally, there is the limit metric, representing the amount the customer is allowed to send based on the license. If the customer sends too much, OneAgent lowers the sample rate and sends less data, so we receive only the number of service calls the customer is allowed to send.

dsfm:server.service_calls.maximum_allowed_per_minute
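Putting the stages together, a rough diagnostic could flag where service calls are reduced along the pipeline described above. This is a hypothetical helper, not a Dynatrace feature; it assumes totals of the five metrics over the same timeframe, and the 1% tolerance is arbitrary:

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class ServiceCallMetrics:
    """Totals of the dsfm service-call metrics over one timeframe."""
    oneagent_processed: float      # dsfm:oneagent.service_calls.processed
    server_received: float         # dsfm:server.service_calls.received
    server_processed: float        # dsfm:server.service_calls.processed
    server_persisted: float        # dsfm:server.service_calls.persisted
    max_allowed_per_minute: float  # dsfm:server.service_calls.maximum_allowed_per_minute


def reduction_stages(m: ServiceCallMetrics, minutes: float,
                     tolerance: float = 0.01) -> list[str]:
    """Return the pipeline stages where the call count drops by more than `tolerance`."""
    keep = 1 - tolerance
    findings = []
    # Server received fewer calls than OneAgent processed: sampling before sending (ATM).
    if m.server_received < m.oneagent_processed * keep:
        findings.append("OneAgent sampling (Adaptive Traffic Management)")
    # Cluster processed fewer calls than it received: overload sampling (ALR).
    if m.server_processed < m.server_received * keep:
        findings.append("cluster sampling (Adaptive Load Reduction)")
    # Fewer calls persisted than processed: overload or timing between the two steps.
    if m.server_persisted < m.server_processed * keep:
        findings.append("processed calls not persisted")
    # Received calls per minute at or above the limit (minus tolerance).
    if m.server_received / minutes >= m.max_allowed_per_minute * keep:
        findings.append("received calls at or near the licensed limit")
    return findings
```

If received is far below OneAgent processed while persisted equals received, the helper reports the OneAgent sampling stage, plus the limit finding if received calls per minute reach the limit.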


01 May 2023 07:40 AM

Thank you @Andreas_Gruber, this is very informative. The documentation is a good starting point, but it somewhat lacks this kind of detail.

Now I am interested in a more detailed explanation of how the sampling is applied (Adaptive Traffic Management and Adaptive Load Reduction): what the rate is, what determines or limits it, and what can be done to (positively) influence it (with examples), e.g. the effect of adding a node or raising a threshold. This would help customers with capacity planning and application impact analysis. But that is another topic or article, I guess. Or is there already an article somewhere that I overlooked?
